F1000 - Post-publication Peer Review of the Biomedical Literature

F1000 Research is a new initiative on the science publishing scene: something like an article-hosting service with post-publication comments. Papers that are not approved by enough commenters are rejected, in that they are no longer publicly visible. The article processing charges (APCs) vary between $250 and $1,000 per article, with the price depending on article length.

PubMed is sufficiently impressed that it includes approved F1000 Research articles in its searches, but just how thoroughly are these papers being vetted? Here, I compare a few features of the post-publication review process at F1000 Research with pre-publication peer review at four high-ranking medical journals.

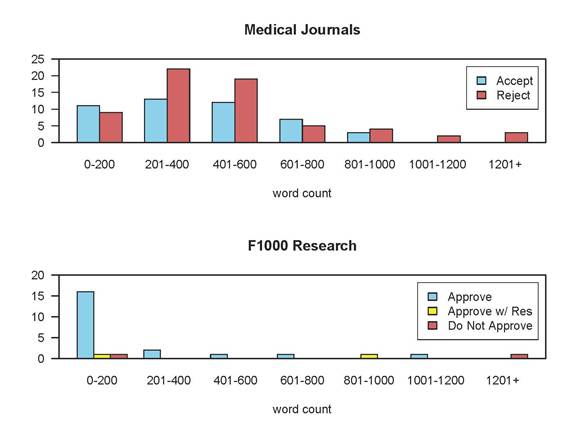

Most strikingly, commenters at F1000 Research made very little effort to improve the paper: F1000 Research comments were typically short and positive, whereas pre-publication reviews at the medical journals were longer and more negative. The figure shows the average word count by recommendation for the first review in 25 recent submissions to each of the medical journals (total = 100), compared to 25 from F1000 Research.

Of the 25 reviews in F1000 Research, 18 were under 200 words (four had zero words), and 21 (84%) were positive. The average length was 254 words. By contrast, the average length for the medical journals was 464 words, and only 42% were positive.

Perhaps the F1000 Research commenters were inexperienced, and did not understand how to write a full-length review? Not so. The average h-index of these 25 F1000 Research commenters is 24.7, which implies a long and distinguished publication record. It appears that the journal is actively encouraging F1000 Faculty Members to provide reviews, but even when the 12 commenters who are Faculty Members are excluded, the average h-index of the remaining F1000 Research commenters is still 18.7. All of these commenters know how to review a paper in the traditional pre-publication setting.
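For readers unfamiliar with the metric: a researcher's h-index is the largest number h such that h of their papers have each been cited at least h times. The minimal Python sketch below implements that standard definition; the function name and the citation counts in the usage line are hypothetical and are not part of the data analyzed here.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that at least h papers have >= h citations each."""
    # Rank papers from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        # The paper at position `rank` must have at least `rank` citations.
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for five papers.
print(h_index([10, 8, 5, 4, 3]))  # four papers have at least 4 citations each
```

By this definition, an individual h-index of 25 means at least 25 papers each cited at least 25 times, which is why an average near that value points to experienced researchers.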

These 25 F1000 Research papers are unlikely to be perfect, and some probably contain major flaws. These commenters would very likely have asked for more improvements had they been writing pre-publication reviews. Why did so many of them simply click "Approve" and wave the paper through mostly unchanged?

First, the editorial policy of F1000 Research incentivizes short, positive comments:

> Referees are asked to provide a referee status for the article ("Approved", "Approved with Reservations" or "Not Approved") and to provide comments to back up their views for the latter two statuses (and optionally for the "Approved" status).

Approving a paper is thus the path of least resistance.

Another problem facing commenters is motivation: the article's horse has already bolted into the public domain. Attempting to close the stable door by detailing the flaws isn't worthwhile, and the authors are unlikely to make any substantial changes in the light of the comments (Gøtzsche et al. found a similar problem for Rapid Responses at BMJ).

In addition, writing a negative report may upset the authors. Nobody would take an anonymous comment seriously, so the comments have to be signed. The choices for a flawed paper are thus to be nice and give it an approval, or to write up an honest evaluation and risk a fight. This design pressures commenters to keep their comments positive.

In pre-publication review, you know that your comments will be evaluated by a senior figure in your field (the editor), and their opinion of you can affect your career. There's also a real risk that a weak review will get shot down in the decision letter: "I have chosen to ignore the superficial review of referee 1, and instead have based my decision on the more comprehensive and critical comments of reviewers 2 and 3". Ouch.

There's no equivalent of a deciding editor at F1000 Research (three "Approvals" are all that is required), so while there's a risk that someone will dismiss your review, the probability that it's someone whose opinion you care about is much lower. It's also much less likely that a short, positive assessment for F1000 Research will be the odd one out, as long, negative reviews are so much less common. Lastly, in pre-publication peer review, the editor will be reluctant to recommend acceptance if they don't think the paper has been thoroughly examined, but this quality control step is absent at F1000 Research.

Another crucial point is how the prospect of rigorous peer review affects the motivation of authors. Knowing that their paper is likely to be scrutinized by experts in their field drives researchers to eliminate as many errors as possible. This motivation may be absent for authors preparing to submit to F1000 Research, as it is readily apparent that most comments are short and positive. The combination of a lack of care from authors and a lack of scrutiny from reviewers may allow badly flawed papers to get the stamp of approval.

More broadly, does it actually matter if online commenting doesn't replicate pre-publication review? If the papers are getting "approval" from experts in the field, does it matter that the papers still contain errors that might otherwise have been caught?

Reading and reviewing are not the same activity. Reviewers typically start with the position "this paper is flawed and should not be published" and expect the authors to convince them otherwise. Reading a paper is different, and is more focused on how the questions and conclusions relate to your own research. The time commitment involved is also very different: a quick poll of some postdocs and senior PhD students found that people spend 6-10 hours reviewing a paper, but only 30-90 minutes reading an article before deciding whether to cite it in their own work.

When researchers are reading a paper, they work from the assumption that someone has gone carefully through it and helped the authors deal with a significant proportion of the problems. This assumption cannot safely be made for F1000 Research papers, because there is no way to know whether the "Approve" recommendations mean that there are no errors in the paper, or that the commenters did not look very hard.

Responsible researchers should therefore approach every paper from F1000 Research as if it has never been through peer review, and before using it in their research they should essentially review it themselves. This imposes a new burden on the community: rather than taking up the time of two or three researchers to review the paper pre-publication, everyone has to spend more time evaluating it afterwards.

There may also be an irony here. Authors opting to use F1000 Research to publish as fast as possible may find that uptake is slower while everyone else finds time to check over the article. Lingering doubts over reliability may also mean that many ignore the work altogether.

There are a few tweaks that F1000 Research could make to ease these concerns. First, its editorial board lists many exemplary researchers, so why not use their expertise in a more "editorial" rather than a "commenter" role? People giving casual approval to flawed papers would then have much more reputation at stake.

Second, the editorial policy of making "approval" the path of least resistance, combined with collecting APCs for approved papers, means that F1000 Research flirts with predatory OA status. This is unfortunate and unnecessary. Why not switch to a submission fee model? This makes particular sense for F1000 Research, as most of its services are provided pre-approval anyway; crucially, it would detach approval decisions from financial reward.

A closing thought: the progress of science does not depend on how many articles are published and how quickly. Instead, progress depends on how well each publication serves as a solid foundation for future work. Gratifying authors with positive comments and instant publication may be a successful business model, but we will all lose out if the ivory towers are increasingly built on sand.

* The journals contributing to this analysis were the New England Journal of Medicine, Breast, Radiology, and the Journal of Bone & Joint Surgery. Thanks to the staffs of those journals for their help, to Kent Anderson and Elyse Mitchell for their help in collecting the data, and to Arianne Albert for the figure.

Source: https://scholarlykitchen.sspnet.org/2013/03/27/how-rigorous-is-the-post-publication-review-process-at-f1000-research/